Prepared by:

HALBORN

Last Updated 02/06/2025

Date of Engagement: November 7th, 2024 - December 6th, 2024

Summary

100% of all REPORTED Findings have been addressed

All findings

8

Critical

1

High

2

Medium

2

Low

0

Informational

3

Table of Contents

- 1. Introduction

- 2. Assessment summary

- 3. Test approach and methodology

- 4. Risk methodology

- 5. Scope

- 6. Assessment summary & findings overview

- 7. Findings & Tech Details

- 7.1 Incompatibility with blobs lead to halt of block processing

- 7.2 Missing chargefee modifier allows spamming the cl

- 7.3 Mismatch between proposer selection algorithm

- 7.4 Potential non-determinism issue in finalizeblock

- 7.5 Lack of pubkey integrity checks

- 7.6 Floating pragma

- 7.7 Use of custom errors

- 7.8 Contracts pause feature missing

1. Introduction

Story engaged Halborn to conduct a security assessment on their Cosmos project, beginning on November 07th, 2024, and ending on December 06th, 2024. The security assessment was scoped to the smart contracts provided to Halborn. Commit hashes and further details can be found in the Scope section of this report.

2. Assessment Summary

The team at Halborn assigned one full-time security engineer to assessment the security of the merge requests. The security engineers are blockchain and smart-contract security experts with advanced penetration testing, smart-contract hacking, and deep knowledge of multiple blockchain protocols.

The purpose of this assessment is to:

Ensure that the Golang components and Smart Contracts operate as intended.

Identify potential security issues with the Cosmos application and Smart Contracts in scope.

In summary, Halborn identified some improvements to reduce the likelihood and impact of risks, which were mostly addressed by the Story team. The main recommendations were the following:

Implement compatibility with common features in Geth such as blobs.Charge fees accordingly to prevent from DoS attacks or trash transactions.Proper error handling implementations.Use algorithms applied in CometBFT.

3. Test Approach and Methodology

Halborn performed a combination of manual and automated security testing to balance efficiency, timeliness, practicality, and accuracy in regard to the scope of the custom modules. While manual testing is recommended to uncover flaws in logic, process, and implementation; automated testing techniques help enhance coverage of structures and can quickly identify items that do not follow security best practices. The following phases and associated tools were used throughout the term of the assessment :

Research into architecture and purpose.

Static Analysis of security for scoped repository, and imported functions. (e.g.,

staticcheck,gosec,unconvert,codeql,ineffassignandsemgrep)Manual Assessment for discovering security vulnerabilities on the codebase.

Ensuring the correctness of the codebase.

Dynamic Analysis of files and modules in scope.

4. RISK METHODOLOGY

4.1 EXPLOITABILITY

Attack Origin (AO):

Attack Cost (AC):

Attack Complexity (AX):

Metrics:

| EXPLOITABILITY METRIC () | METRIC VALUE | NUMERICAL VALUE |

|---|---|---|

| Attack Origin (AO) | Arbitrary (AO:A) Specific (AO:S) | 1 0.2 |

| Attack Cost (AC) | Low (AC:L) Medium (AC:M) High (AC:H) | 1 0.67 0.33 |

| Attack Complexity (AX) | Low (AX:L) Medium (AX:M) High (AX:H) | 1 0.67 0.33 |

4.2 IMPACT

Confidentiality (C):

Integrity (I):

Availability (A):

Deposit (D):

Yield (Y):

Metrics:

| IMPACT METRIC () | METRIC VALUE | NUMERICAL VALUE |

|---|---|---|

| Confidentiality (C) | None (I:N) Low (I:L) Medium (I:M) High (I:H) Critical (I:C) | 0 0.25 0.5 0.75 1 |

| Integrity (I) | None (I:N) Low (I:L) Medium (I:M) High (I:H) Critical (I:C) | 0 0.25 0.5 0.75 1 |

| Availability (A) | None (A:N) Low (A:L) Medium (A:M) High (A:H) Critical (A:C) | 0 0.25 0.5 0.75 1 |

| Deposit (D) | None (D:N) Low (D:L) Medium (D:M) High (D:H) Critical (D:C) | 0 0.25 0.5 0.75 1 |

| Yield (Y) | None (Y:N) Low (Y:L) Medium (Y:M) High (Y:H) Critical (Y:C) | 0 0.25 0.5 0.75 1 |

4.3 SEVERITY COEFFICIENT

Reversibility (R):

Scope (S):

Metrics:

| SEVERITY COEFFICIENT () | COEFFICIENT VALUE | NUMERICAL VALUE |

|---|---|---|

| Reversibility () | None (R:N) Partial (R:P) Full (R:F) | 1 0.5 0.25 |

| Scope () | Changed (S:C) Unchanged (S:U) | 1.25 1 |

| Severity | Score Value Range |

|---|---|

| Critical | 9 - 10 |

| High | 7 - 8.9 |

| Medium | 4.5 - 6.9 |

| Low | 2 - 4.4 |

| Informational | 0 - 1.9 |

5. SCOPE

- /evmengine/keeper/abci.go

- /evmengine/keeper/db.go

- /evmengine/keeper/evmmsgs.go

- /IPTokenStaking.sol

- /PubKeyVerifier.sol

- /UBIPool.sol

- /abci.go

- /app_config.go

- /app.go

6. Assessment Summary & Findings Overview

Critical

1

High

2

Medium

2

Low

0

Informational

3

| Security analysis | Risk level | Remediation Date |

|---|---|---|

| Incompatibility with blobs lead to halt of block processing | Critical | Solved - 12/11/2024 |

| Missing chargeFee modifier allows spamming the CL | High | Solved - 11/23/2024 |

| Mismatch between proposer selection algorithm | High | Solved - 12/10/2024 |

| Potential Non-Determinism Issue In FinalizeBlock | Medium | Solved - 11/25/2024 |

| Lack of pubkey integrity checks | Medium | Solved - 12/04/2024 |

| Floating Pragma | Informational | Solved - 12/11/2024 |

| Use Of Custom Errors | Informational | Acknowledged - 12/09/2024 |

| Contracts Pause Feature Missing | Informational | Acknowledged - 12/10/2024 |

7. Findings & Tech Details

7.1 Incompatibility with blobs lead to halt of block processing

//

Description

The evmengine module is responsible for interacting with Geth's APIs (forkchoiceUpdatedV3 and getPayloadV3) to construct optimistic blocks based on transactions occurring in Geth. These APIs, in their version V3, include compatibility with Ethereum blobs introduced by the relevant EIPs (e.g., EIP-4844). However, there is a critical issue in the implementation of the pushPayload function, which always sets emptyVersionHashes to an empty array of hashes when calling Geth's NewPayloadV3 API.

err = retryForever(ctx, func(ctx context.Context) (bool, error) {

status, err := pushPayload(ctx, s.engineCl, payload)

if err != nil || isUnknown(status) {

// We need to retry forever on networking errors, but can't easily identify them, so retry all errors.

log.Warn(ctx, "Verifying proposal failed: push new payload to evm (will retry)", err,

"status", status.Status)

return false, nil // Retry

} else if invalid, err := isInvalid(status); invalid {

return false, errors.Wrap(err, "invalid payload, rejecting proposal") // Don't retry

} else if isSyncing(status) {

// If this is initial sync, we need to continue and set a target head to sync to, so don't retry.

log.Warn(ctx, "Can't properly verifying proposal: evm syncing", err,

"payload_height", payload.Number)

}

return true, nil // We are done, don't retry.

})

if err != nil {

return nil, err

}The pushPayload function sets emptyVersionHashes to an empty array (make([]common.Hash, 0)), regardless of whether the payload includes blob transactions. This causes a mismatch between the blob hashes in the payload and the expected versioned hashes during validation in Geth's ExecutableDataToBlockNoHash.

func pushPayload(ctx context.Context, engineCl ethclient.EngineClient, payload engine.ExecutableData) (engine.PayloadStatusV1, error) {

sdkCtx := sdk.UnwrapSDKContext(ctx)

appHash := common.BytesToHash(sdkCtx.BlockHeader().AppHash)

if appHash == (common.Hash{}) {

return engine.PayloadStatusV1{}, errors.New("app hash is empty")

}

emptyVersionHashes := make([]common.Hash, 0) // Cannot use nil.

// Push it back to the execution client (mark it as possible new head).

status, err := engineCl.NewPayloadV3(ctx, payload, emptyVersionHashes, &appHash)

if err != nil {

return engine.PayloadStatusV1{}, errors.Wrap(err, "new payload")

}

return status, nil

}This behavior leads to a failure when the ExecutableDataToBlockNoHash function is invoked within Geth. Specifically, this function validates that the blob hashes used in the payload match the versionedHashes provided. Since emptyVersionHashes is always empty, any transaction containing blobs will fail validation, resulting in an error. Consequently, the Cosmos evmengine cannot process such transactions, preventing block verification and generation on the chain.

var blobHashes = make([]common.Hash, 0, len(txs))

for _, tx := range txs {

blobHashes = append(blobHashes, tx.BlobHashes()...)

}

if len(blobHashes) != len(versionedHashes) {

return nil, fmt.Errorf("invalid number of versionedHashes: %v blobHashes: %v", versionedHashes, blobHashes)

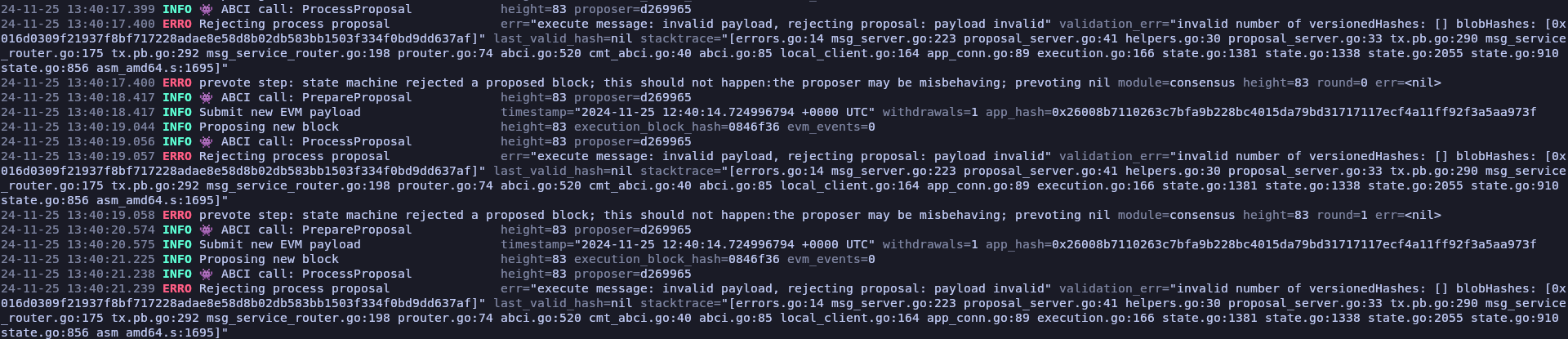

Proof of Concept

After sending a transaction with a blob attached, the chain halts with the following output:

Thus, being unable to process further blocks.

BVSS

Recommendation

It's recommended the following:

Modify the

pushPayloadfunction to correctly populate theversionedHashesparameter with the blob hashes associated with the transactions in the payload.This requires extracting blob hashes from the payload's transactions and ensuring they are passed to Geth's

NewPayloadV3API.

Remediation

SOLVED: The Story team solved this issue by returning an error in case a nil input is received, allowing to process non-nil payloads.

Remediation Hash

7.2 Missing chargeFee modifier allows spamming the CL

//

Description

The removeOperator, redelegate and redelegateOnBehalf do not have the chargeFee modifier. As none of them requires the user sending funds, it allows a malicious user to spam the CL by sending these events, which requires consumption from the CL.

Code Location

function removeOperator(

bytes calldata uncmpPubkey,

address operator

) external verifyUncmpPubkeyWithExpectedAddress(uncmpPubkey, msg.sender) function redelegate(

bytes calldata delegatorUncmpPubkey,

bytes calldata validatorUncmpSrcPubkey,

bytes calldata validatorUncmpDstPubkey,

uint256 delegationId,

uint256 amount

)

external

verifyUncmpPubkeyWithExpectedAddress(delegatorUncmpPubkey, msg.sender)

verifyUncmpPubkey(validatorUncmpSrcPubkey)

verifyUncmpPubkey(validatorUncmpDstPubkey) function redelegateOnBehalf(

bytes calldata delegatorUncmpPubkey,

bytes calldata validatorUncmpSrcPubkey,

bytes calldata validatorUncmpDstPubkey,

uint256 delegationId,

uint256 amount

)

external

verifyUncmpPubkey(delegatorUncmpPubkey)

verifyUncmpPubkey(validatorUncmpSrcPubkey)

verifyUncmpPubkey(validatorUncmpDstPubkey)BVSS

Recommendation

It's recommended to add the chargeFee modifier to them.

Remediation

SOLVED: The Story team fixed this issue as recommended.

Remediation Hash

7.3 Mismatch between proposer selection algorithm

//

Description

The function isNextProposer attempts to determine whether the local node is the next proposer for the upcoming block in a CometBFT consensus system. However, there is a fundamental issue with the logic: it incorrectly applies a simple round-robin algorithm to select the next proposer, while CometBFT uses a weighted round-robin algorithm based on validator voting power. This discrepancy can lead to incorrect proposer selection, which may cause the node to behave incorrectly in the consensus process, potentially leading to missed blocks or other consensus failures:

// isNextProposer returns true if the local node is the proposer

// for the next block. It also returns the next block height.

//

// Note that the validator set can change, so this is an optimistic check.

func (k *Keeper) isNextProposer(ctx context.Context, currentProposer []byte, currentHeight int64) (bool, error) {

// PostFinalize can be called during block replay (performed in newCometNode),

// but cmtAPI is set only after newCometNode completes (see app.SetCometAPI), so a nil check is necessary.

if k.cmtAPI == nil {

return false, nil

}

valset, ok, err := k.cmtAPI.Validators(ctx, currentHeight)

if err != nil {

return false, err

} else if !ok || len(valset.Validators) == 0 {

return false, errors.New("validators not available")

}

idx, _ := valset.GetByAddress(currentProposer)

if idx < 0 {

return false, errors.New("proposer not in validator set")

}

nextIdx := int(idx+1) % len(valset.Validators)

nextProposer := valset.Validators[nextIdx]

nextAddr, err := k1util.PubKeyToAddress(nextProposer.PubKey)

if err != nil {

return false, err

}

isNextProposer := nextAddr == k.validatorAddr

return isNextProposer, nil

}

The code uses a modulo operation (nextIdx := int(idx+1) % len(valset.Validators)) to select the next proposer in a round-robin fashion. However, CometBFT uses a weighted round-robin algorithm that takes into account the voting power of each validator when selecting proposers. This oversight means that validators with higher voting power will not be appropriately prioritized, resulting in an incorrect proposer selection.

BVSS

Recommendation

Modify the proposer selection logic to account for validator voting power in line with CometBFT’s weighted round-robin algorithm, as it is explained in CometBFT’s documentation.

Remediation

SOLVED: The Story team fixed this issue by using the exposed validators functions to check if the local node is the next (predicted) proposer.

Remediation Hash

References

7.4 Potential Non-Determinism Issue In FinalizeBlock

//

Description

There is a possible non-determinism issue in FinalizeBlock. That function must be deterministic per the CometBFT specs. However, there is a call path postFinalize -> isNextProposer -> Validators -> cmtAPI that can incurr in a non-deterministic scenario:

// FinalizeBlock calls BeginBlock -> DeliverTx (for all txs) -> EndBlock.

func (l abciWrapper) FinalizeBlock(ctx context.Context, req *abci.RequestFinalizeBlock) (*abci.ResponseFinalizeBlock, error) {

...

if err := l.postFinalize(sdkCtx); err != nil {

log.Error(ctx, "PostFinalize callback failed [BUG]", err, "height", req.Height)

return resp, err

}

...// PostFinalize is called by our custom ABCI wrapper after a block is finalized.

// It starts an optimistic build if enabled and if we are the next proposer.

//

// This custom ABCI callback is used since we need to trigger optimistic builds

// immediately after FinalizeBlock with the latest app hash

// which isn't available from cosmosSDK otherwise.

func (k *Keeper) PostFinalize(ctx sdk.Context) error {

...

// Maybe start building the next block if we are the next proposer.

isNext, err := k.isNextProposer(ctx, proposer, height)

if err != nil {

return errors.Wrap(err, "next proposer")

}

... // isNextProposer returns true if the local node is the proposer

// for the next block. It also returns the next block height.

//

// Note that the validator set can change, so this is an optimistic check.

func (k *Keeper) isNextProposer(ctx context.Context, currentProposer []byte, currentHeight int64) (bool, error) {

...

valset, ok, err := k.cmtAPI.Validators(ctx, currentHeight)

if err != nil {

return false, err

}

...The reason why it can lead to non-determinism is because the call to the CometBFT API is done through an RPC, which is a network connection, which by definition is non-deterministic between nodes (as some can revert due to timeout, network errors or go on flawlessly). That makes it possible that, under the same input, some nodes would go on whilst others would crash, as the errors are not handled in the FinalizeBlock context but rather bubbled up to the caller, leading to a chain split and the consensus would be broken.

BVSS

Recommendation

Ignore any error returned from PostFinalize in FinalizeBlock.

Remediation

SOLVED: The Story team fixed this issue by skipping optimistic builds if any non-determinism error was encountered.

Remediation Hash

7.5 Lack of pubkey integrity checks

//

Description

The function UncmpPubKeyToCmpPubKey is responsible for converting uncompressed public keys into their compressed format. While it correctly handles the conversion by extracting the x and y coordinates and determining the prefix based on the parity of y, it does not validate whether the provided public key lies on the elliptic curve. This omission can lead to unexpected errors or security vulnerabilities when other modules, such as evmstaking, attempt to use these keys.

func UncmpPubKeyToCmpPubKey(uncmpPubKey []byte) ([]byte, error) {

if len(uncmpPubKey) != 65 || uncmpPubKey[0] != 0x04 {

return nil, errors.New("invalid uncompressed public key length or format")

}

// Extract the x and y coordinates

x := new(big.Int).SetBytes(uncmpPubKey[1:33])

y := new(big.Int).SetBytes(uncmpPubKey[33:])

// Determine the prefix based on the parity of y

prefix := byte(0x02) // Even y

if y.Bit(0) == 1 {

prefix = 0x03 // Odd y

}

// Construct the compressed public key

compressedPubKey := make([]byte, 33)

compressedPubKey[0] = prefix

copy(compressedPubKey[1:], x.Bytes())

return compressedPubKey, nil

}As it can be seen above, the function does not verify whether the x and y coordinates of the uncompressed public key satisfy the elliptic curve equation. Public keys that do not lie on the curve are invalid and can cause cryptographic operations to fail unexpectedly in downstream modules. Since this function is used by other modules, any invalid key processed here could lead to cascading failures or unexpected behavior in those modules.

BVSS

Recommendation

It is recommended to validate whether the used points are in the described curve in order to discard incorrect keys.

Remediation

SOLVED: The Story team solved these issues as it was recommended.

Remediation Hash

7.6 Floating Pragma

//

Description

Smart contracts in scoped folder use the floating pragma >=0.8.23. Contracts should be deployed with the same compiler version and flags that they have been tested with thoroughly. Locking the pragma helps to ensure that contracts do not accidentally get deployed using, for example, either an outdated compiler version that might introduce bugs that affect the contract system negatively or a pragma version too new which has not been extensively tested.

BVSS

Recommendation

Consider locking the pragma version with known bugs for the compiler version by removing the >= symbol. When possible, do not use floating pragma in the final live deployment. Specifying a fixed compiler version ensures that the bytecode produced does not vary between builds. This is especially important if you rely on bytecode-level verification of the code.

Remediation

SOLVED: The Story team fixed this issue by setting 0.8.23 as fixed pragma version.

Remediation Hash

7.7 Use Of Custom Errors

//

Description

Failed operations in several contracts are reverted with an accompanying message selected from a set of hard-coded strings.

In the EVM, emitting a hard-coded string in an error message costs ~50 more gas than emitting a custom error. Additionally, hard-coded strings increase the gas required to deploy the contract.

BVSS

Recommendation

It's recommended to use custom errors instead of hardcoded strings.

Remediation

ACKNOWLEDGED: The Story team acknowledged this issue by stating the following:

Acknowledged, deployment costs are not an issue due to those contracts being predeploys. We will consider using custom errors for mainnet.

7.8 Contracts Pause Feature Missing

//

Description

It was identified that no high-privileged user can pause any of the scoped contracts. In the event of a security incident, the owner would not be able to stop any plausible malicious actions. Pausing the contract can also lead to more considered decisions.

BVSS

Recommendation

It is recommended including the pause feature in the contracts.

Remediation

ACKNOWLEDGED: The Story team acknowledged this issue.

Halborn strongly recommends conducting a follow-up assessment of the project either within six months or immediately following any material changes to the codebase, whichever comes first. This approach is crucial for maintaining the project’s integrity and addressing potential vulnerabilities introduced by code modifications.

Table of Contents

- 1. Introduction

- 2. Assessment summary

- 3. Test approach and methodology

- 4. Risk methodology

- 5. Scope

- 6. Assessment summary & findings overview

- 7. Findings & Tech Details

- 7.1 Incompatibility with blobs lead to halt of block processing

- 7.2 Missing chargefee modifier allows spamming the cl

- 7.3 Mismatch between proposer selection algorithm

- 7.4 Potential non-determinism issue in finalizeblock

- 7.5 Lack of pubkey integrity checks

- 7.6 Floating pragma

- 7.7 Use of custom errors

- 7.8 Contracts pause feature missing

// Download the full report

* Use Google Chrome for best results

** Check "Background Graphics" in the print settings if needed